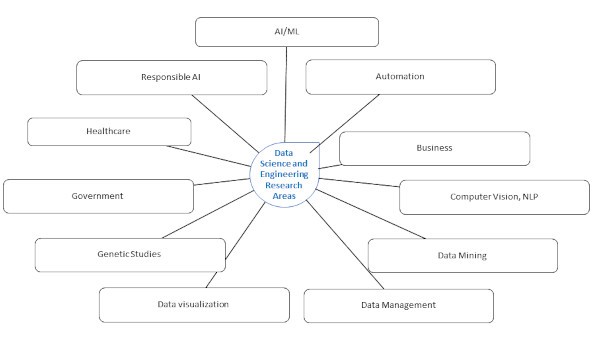

Data Science and Engineering: Research Areas

Data science emerged as an independent discipline in the decade beginning in 2010, driven by the explosive growth of big data analytics, cloud computing, and IoT technologies. A data scientist requires fundamental knowledge of computer science, statistics, and machine learning, which they may apply to solve problems in a variety of domains. We may define data science as the study of the scientific principles that describe data and their interrelationships. Some current areas of research in Data Science and Engineering are categorized and enumerated below:

1. Artificial Intelligence / Machine Learning:

While human beings learn from experience, machines learn from data and improve their accuracy over time. AI applications attempt to make a computer, robot, or other machine mimic human intelligence. AI/ML has brought disruptive innovations to business and social life. One emerging area in AI is generative AI, in which algorithms create content such as text, code, audio, images, and videos. The AI-based chatbot ‘ChatGPT’ from OpenAI is a product in this line; OpenAI fine-tuned it using reinforcement learning from human feedback. ChatGPT can write computer programs, compose music, write short stories and essays, and much more!
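To make "learning from data" concrete, here is a minimal sketch: a one-variable linear model fitted by gradient descent. Each epoch nudges the parameters `w` and `b` in the direction that reduces the mean squared error, so the model's error shrinks as it keeps seeing the data. The dataset, learning rate, and epoch count are made-up assumptions for illustration, not taken from the text.

```python
# Hypothetical training data lying on the line y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def mse(w, b):
    """Mean squared error of the line y = w*x + b on the training data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(epochs=500, lr=0.05):
    """Fit w and b by gradient descent, recording the error after each epoch."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        # Partial derivatives of the MSE with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(mse(w, b))
    return w, b, history


w, b, history = train()
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"error before training={mse(0.0, 0.0):.1f}, after={history[-1]:.6f}")
```

The learning rate is kept small enough that every step lowers the error; a much larger value could make the updates overshoot and diverge.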

2. Automation:

Research areas in automation include public ride-share services (e.g., the Uber platform), self-driving vehicles, and manufacturing automation. In industry, AI/ML techniques are widely used to identify unusual patterns in sensor readings from machinery and equipment in order to detect or prevent malfunctions.
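A simple way to flag unusual sensor readings is a z-score test against a baseline recorded while the equipment was known to be healthy. The sketch below assumes hypothetical vibration readings and a threshold of 3 standard deviations; production systems typically use richer models, so treat this as an illustration of the idea only.

```python
import statistics

# Hypothetical vibration readings (mm/s) logged while a machine was known to be healthy
baseline = [2.1, 2.3, 2.2, 2.0, 2.4, 2.2, 2.1, 2.3, 2.2, 2.0]

# New readings arriving from the same sensor
incoming = [2.2, 2.3, 4.9, 2.1, 2.2]


def flag_anomalies(baseline, readings, threshold=3.0):
    """Return (index, value) pairs lying more than `threshold` standard
    deviations away from the mean of the healthy baseline."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline readings show no variation")
    return [(i, r) for i, r in enumerate(readings) if abs(r - mu) / sigma > threshold]


print(flag_anomalies(baseline, incoming))  # [(2, 4.9)]
```

Computing the mean and spread from a healthy baseline, rather than from the readings being tested, keeps a large spike from inflating the standard deviation and masking itself.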

3. Business:

Social media provide opportunities for people to interact, share, and participate in numerous activities on a massive scale. A marketing researcher may analyze this data to understand human sentiments and behavior unobtrusively, at a scale unheard of in traditional marketing. We encounter personalized product recommender systems almost every day. Content-based recommender systems infer a user's preferences from the history of their previous activities and recommend similar items. Collaborative recommender systems use data mining techniques to make personalized product recommendations during live customer transactions, based on the opinions of customers with similar profiles.
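The collaborative approach can be sketched as user-based collaborative filtering: find the customers whose ratings most resemble the target user's (here by cosine similarity, treating unrated products as 0), then predict ratings for unseen products as a similarity-weighted average of those neighbors' ratings. The customer names, products, and ratings are hypothetical, and real recommenders add normalization, scaling, and much larger neighborhoods.

```python
import math

# Hypothetical product ratings (1-5); a missing entry means "not rated"
ratings = {
    "asha": {"laptop": 5, "mouse": 4, "headphones": 1},
    "ben": {"laptop": 4, "mouse": 5, "keyboard": 4},
    "chen": {"headphones": 5, "speaker": 4, "laptop": 1},
    "divya": {"laptop": 5, "mouse": 4},
}


def cosine(u, v):
    """Cosine similarity of two rating vectors, treating unrated products as 0."""
    dot = sum(u[p] * v[p] for p in set(u) & set(v))
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v)


def recommend(user, k=2):
    """Predict ratings for products the user has not rated, using the
    similarity-weighted ratings of the k most similar customers."""
    neighbors = sorted(
        ((cosine(ratings[user], ratings[o]), o) for o in ratings if o != user),
        reverse=True,
    )[:k]
    totals, weights = {}, {}
    for sim, other in neighbors:
        for product, r in ratings[other].items():
            if product not in ratings[user]:
                totals[product] = totals.get(product, 0.0) + sim * r
                weights[product] = weights.get(product, 0.0) + sim
    predictions = [(totals[p] / weights[p], p) for p in totals if weights[p] > 0]
    return sorted(predictions, reverse=True)


print(recommend("divya"))  # keyboard (predicted 4.0) ranks above headphones (1.0)
```

Here Divya's two nearest neighbors are Asha and Ben, whose tastes in laptops and mice match hers, so Ben's high keyboard rating becomes her top recommendation.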

Data science finds numerous applications in finance, such as stock market analysis, targeted marketing, and the detection of unusual transaction patterns, fraudulent credit card transactions, and money laundering. Financial markets are complex and chaotic; however, AI technologies make it possible to process massive amounts of real-time data in support of more accurate forecasting and trading. Stock Hero, Scanz, Tickeron, Imperative Execution, and Algoriz are some of the AI-based products for stock market prediction.
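As a toy illustration of spotting unusual transactions, the sketch below applies Tukey's fences: amounts more than 1.5 interquartile ranges beyond the first or third quartile are flagged for review. The transaction amounts are hypothetical, and real fraud-detection systems combine many more signals (merchant, location, timing) with learned models.

```python
import statistics

# Hypothetical card transaction amounts (USD) for one customer
amounts = [42.5, 18.0, 63.2, 25.0, 51.7, 38.9, 29.4, 2450.0, 47.1, 33.3]


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


print(iqr_outliers(amounts))  # [2450.0]
```

Quartiles are less sensitive to a single extreme value than the mean and standard deviation, which makes this rule convenient when the history itself may contain fraud.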

4. Computer Vision and NLP:

AI/ML models are used extensively in digital image processing, computer vision, speech recognition, and natural language processing (NLP). In image processing, we apply mathematical transformations to enhance an image; these typically include smoothing, sharpening, contrast adjustment, and stretching. From the transformed images we can extract various types of features: edges, corners, ridges, and blobs/regions. The objective of computer vision is to identify objects in images. To achieve this, the input image is processed, features are extracted, and the features are used to classify (that is, identify) the object.
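The transform-then-extract pipeline can be sketched in plain Python by sliding a 3x3 kernel over a tiny image: a box-blur kernel performs smoothing, and a Sobel kernel highlights vertical edges, which then serve as extracted features. The 6x6 image and the threshold of 100 are hypothetical; image libraries provide optimized versions of these filters.

```python
# A hypothetical 6x6 grayscale image: dark left half (10), bright right half (200)
image = [[10, 10, 10, 200, 200, 200] for _ in range(6)]

BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]  # smoothing: average of the 3x3 neighborhood
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to left-to-right intensity change


def apply_kernel(img, kernel):
    """Slide a 3x3 kernel over the interior pixels (cross-correlation);
    border pixels are left at 0 for simplicity."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r][c] = sum(
                kernel[i][j] * img[r + i - 1][c + j - 1]
                for i in range(3)
                for j in range(3)
            )
    return out


smoothed = apply_kernel(image, BOX_BLUR)
edges = apply_kernel(image, SOBEL_X)

# Feature extraction: columns in row 2 where the edge response is strong
edge_columns = [c for c in range(6) if abs(edges[2][c]) > 100]
print("smoothed row 2:", [round(v, 1) for v in smoothed[2]])
print("vertical edge at columns:", edge_columns)
```

Smoothing turns the sharp 10-to-200 jump into a gradual ramp, while the Sobel response is zero in flat regions and large exactly where the intensity changes.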

Natural language processing techniques are used to understand human language in written or spoken form, and to translate it into another language or respond to commands. Voice-operated GPS systems, translation tools, speech-to-text dictation, and customer service chatbots are all applications of NLP. Siri and Alexa are popular NLP products.
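Responding to a command starts with working out what the user wants. The sketch below is a deliberately simple stand-in: it tokenizes an utterance and picks the intent whose keyword set overlaps most with the tokens. The intents and keywords are hypothetical; products such as Siri and Alexa rely on statistical and neural language models rather than fixed keyword lists.

```python
import re

# Hypothetical intents for a voice assistant, each described by a set of keywords
INTENTS = {
    "navigate": {"directions", "route", "navigate", "drive"},
    "weather": {"weather", "rain", "forecast", "temperature"},
    "timer": {"timer", "alarm", "remind", "minutes"},
}


def tokenize(text):
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def classify_intent(utterance):
    """Pick the intent whose keywords overlap most with the utterance's tokens.
    Ties go to the intent listed first; returns None if nothing matches."""
    tokens = set(tokenize(utterance))
    best, best_overlap = None, 0
    for intent, keywords in INTENTS.items():
        overlap = len(tokens & keywords)
        if overlap > best_overlap:
            best, best_overlap = intent, overlap
    return best


for text in ["Will it rain tomorrow?", "Set a timer for ten minutes", "Sing me a song"]:
    print(text, "->", classify_intent(text))
```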

5. Data Mining:

Data mining is the process of cleaning and analyzing data to identify hidden patterns and trends that are not readily discernible from a conventional spreadsheet. Building classification and clustering models for high-dimensional, streaming, and/or big data receives much attention from researchers. Network-graph based algorithms are being developed to represent and analyze interactions on social media platforms such as Facebook, Twitter, LinkedIn, and Instagram, and on websites.
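A basic network-graph analysis of social interactions is degree centrality: represent users as nodes, interactions (replies, mentions) as undirected edges, and score each user by the fraction of other users they are directly connected to. The user names and interaction pairs below are hypothetical; graph libraries offer this and many more sophisticated measures.

```python
from collections import defaultdict

# Hypothetical "who interacted with whom" pairs (e.g., replies or mentions)
interactions = [
    ("ana", "raj"), ("ana", "li"), ("ana", "tom"),
    ("raj", "li"), ("tom", "zoe"),
]


def build_graph(edges):
    """Build an undirected adjacency list (user -> set of neighbors)."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def degree_centrality(graph):
    """Fraction of the other users that each user is directly connected to."""
    n = len(graph)
    return {user: len(nbrs) / (n - 1) for user, nbrs in graph.items()}


graph = build_graph(interactions)
centrality = degree_centrality(graph)
most_central = max(centrality, key=centrality.get)
print(most_central, centrality[most_central])  # ana 0.75
```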

6. Data Management:

Information storage and retrieval is an area concerned with the effective and efficient storage and retrieval of digital documents in multiple data formats, based on their semantic content. Government regulations and individual privacy concerns necessitate privacy-preserving methods for storing and sharing data, such as secure multi-party computation and homomorphic encryption (both cryptographic techniques) and differential privacy (a statistical technique).
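Of the techniques named above, differential privacy is the easiest to sketch. The Laplace mechanism releases a count plus random noise with scale sensitivity/epsilon; a counting query changes by at most 1 when one person is added or removed, so the scale is 1/epsilon. The patient ages, the query, and epsilon = 0.5 are hypothetical choices for illustration.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two Exp(1) draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    A counting query has sensitivity 1, so noise with scale 1/epsilon suffices.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical patient ages
ages = [34, 61, 47, 72, 55, 29, 68, 80, 41, 66]
rng = random.Random(42)
noisy = private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
print(f"noisy count of patients aged 65+: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy; an analyst sees a useful approximate count, but cannot tell with confidence whether any particular individual is in the data.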

Data-stream processing needs specialized algorithms and techniques for computing on huge volumes of data that arrive rapidly and require immediate processing, e.g., satellite images, sensor data, internet traffic, and web searches. Other areas of research in data management include big data databases, cloud computing architectures, crowdsourcing, human-machine interaction, and data governance.
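A classic building block for stream processing is a statistic that updates in constant memory as each item arrives, without storing the stream. The sketch below uses Welford's single-pass algorithm for the running mean and variance; the sensor readings are hypothetical.

```python
class RunningStats:
    """Single-pass mean and sample variance (Welford's algorithm) in O(1) memory."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def update(self, x):
        """Fold one new value into the statistics."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Sample variance; 0.0 until at least two values have arrived."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0


# Hypothetical sensor readings arriving one at a time
readings = [21.0, 21.4, 20.9, 22.1, 21.6]
stats = RunningStats()
for reading in readings:
    stats.update(reading)
    print(f"n={stats.n} mean={stats.mean:.3f} var={stats.variance:.4f}")
```

Welford's update is preferred over accumulating a sum and a sum of squares because it avoids the catastrophic cancellation that the naive formula suffers on long streams.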

7. Data Visualization:

Visualizing complex, big, and/or streaming data, such as the onset of a storm or a cosmic event, demands advanced techniques. In data visualization, the user usually follows a three-step process: get an overview of the data, identify interesting patterns, and drill down for details. In most cases, the input data are first subjected to mathematical transformations and statistical summarization. Augmented reality (AR) enhances the view of the real physical world with audiovisual or other sensory stimuli delivered by technology. Virtual reality (VR), by contrast, provides a computer-generated virtual environment that gives users an immersive experience. For example, ‘Pokémon GO’, which lets you play the Pokémon game, is an AR product released in 2016; Google Earth VR is a VR product that ‘puts the whole world within your reach’.
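The "overview" step usually rests on a statistical summarization such as binning values into a histogram. The sketch below bins hypothetical hourly wind speeds into equal-width bins and prints a text histogram, in which the cluster of normal readings and the isolated high readings stand out at a glance. The data and bin settings are assumptions for illustration.

```python
def histogram(values, bins, lo, hi):
    """Count values into `bins` equal-width bins over [lo, hi); values outside are skipped."""
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        if lo <= v < hi:
            # min() guards against floating-point rounding at the upper edge
            counts[min(int((v - lo) // width), bins - 1)] += 1
    return counts


# Hypothetical hourly wind speeds (km/h); the late spike hints at a storm's onset
wind = [12, 15, 11, 14, 18, 16, 13, 17, 42, 55, 61]
counts = histogram(wind, bins=7, lo=0, hi=70)

# Overview: one row per 10 km/h bin, one '#' per reading
for i, c in enumerate(counts):
    print(f"{i * 10:>2}-{i * 10 + 9:>2} km/h | {'#' * c}")
```

From such an overview, a user would then identify the interesting pattern (the high-speed readings) and drill down into the individual hours behind it.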

8. Genetic Studies:

Genetic studies are pathbreaking investigations of the biological basis of inherited and acquired genetic variation using advanced statistical methods. The Human Genome Project (1990–2003) produced a genome sequence that accounted for over 90% of the human genome, at a cost of about USD 3 billion. The data underlying a single human genome sequence amount to about 200 gigabytes. The digital revolution has made it possible to trace human evolution with remarkable accuracy. Note that the cost of sequencing an entire human genome has fallen from USD 100,000,000 in 2000 to USD 800 in 2020!
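The two sequencing-cost figures quoted in this paragraph imply a striking rate of decline. As a worked calculation, under the simplifying assumption that the cost fell at a constant exponential rate over the 20 years from 2000 to 2020:

```latex
\text{overall reduction} = \frac{\mathrm{USD}\ 100{,}000{,}000}{\mathrm{USD}\ 800} = 1.25 \times 10^{5}

\text{average yearly factor} = \left(1.25 \times 10^{5}\right)^{1/20} \approx 1.80
\quad\Longrightarrow\quad 1 - \frac{1}{1.80} \approx 0.44 \ \text{(about 44\% cheaper each year)}

t_{1/2} = \frac{20 \ln 2}{\ln\!\left(1.25 \times 10^{5}\right)} \approx \frac{13.86}{11.74} \approx 1.18 \ \text{years}
```

That is, on average the cost of sequencing a human genome halved roughly every 14 months over this period. The actual decline was uneven from year to year, so these are averages only.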

9. Government:

Governments need smart and effective platforms for interacting with citizens and for data collection, validation, and analysis. Data-driven tools and AI/ML techniques are used to fight terrorism, intervene in street crime, and tackle cyber-attacks. Data science also supports public services, national and social security, and emergency response.

10. Healthcare:

The most important contribution of data science to the pharmaceutical industry is computational support for cost-effective drug discovery using AI/ML techniques. AI/ML also supports medical diagnosis, preventive care, and the prediction of failures based on historical data. The study of genetic data helps identify anomalies, predict possible failures, and suggest personalized drugs, e.g., in cancer treatment. Medical image processing uses data science techniques to visualize, interrogate, identify, and help treat deformities in internal organs and systems.
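Diagnosis "based on historical data" can be illustrated with k-nearest-neighbors classification: a new patient receives the majority label of the k most similar past patients. The features (fasting glucose and BMI), the records, and the labels below are hypothetical and purely illustrative, not clinical guidance; in practice features would also be standardized so that one with a larger numeric range does not dominate the distance.

```python
import math
from collections import Counter

# Hypothetical historical records: (fasting glucose mg/dL, BMI) -> label
history = [
    ((85, 22.0), "healthy"), ((90, 24.5), "healthy"), ((95, 23.0), "healthy"),
    ((140, 31.0), "at risk"), ((155, 29.5), "at risk"), ((160, 33.0), "at risk"),
]


def knn_predict(patient, records, k=3):
    """Label a new patient by majority vote among the k most similar past patients
    (Euclidean distance on the raw, unscaled features)."""
    nearest = sorted(records, key=lambda rec: math.dist(patient, rec[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_predict((150, 30.0), history))  # at risk
print(knn_predict((88, 23.0), history))   # healthy
```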

Research on electronic health records (EHRs) is concerned with storing data that arrive in multiple formats, data privacy (e.g., conformance with HIPAA privacy regulations), and data sharing between stakeholders. Wearable technology, for example Fitbit and smartwatches, provides electronic devices and platforms for collecting and analyzing data related to personal health and exercise. The Covid-19 pandemic demonstrated the power of data science in monitoring and controlling an epidemic, as well as in developing drugs in record time.
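One common building block for sharing health records between stakeholders is pseudonymization: replacing a patient identifier with a keyed hash (HMAC-SHA256). The same patient always maps to the same token, so records can still be linked across datasets, but a recipient without the secret key cannot recompute or reverse the mapping. The key, the record fields, and the identifier format are hypothetical, and this sketch is one ingredient of privacy protection, not a complete compliance solution.

```python
import hashlib
import hmac

# Hypothetical secret key held only by the data custodian (never shared with recipients)
SECRET_KEY = b"replace-with-a-securely-stored-random-key"


def pseudonymize(patient_id: str) -> str:
    """Replace a patient identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    Deterministic for a given key, so the same patient links across datasets.
    """
    return hmac.new(SECRET_KEY, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical EHR record prepared for sharing
record = {"patient_id": "MRN-004211", "age": 57, "diagnosis": "type 2 diabetes"}
shared = {**record, "patient_id": pseudonymize(record["patient_id"])}
print(shared)
```

A keyed hash is used instead of a plain hash because identifiers such as record numbers come from a small, guessable space: anyone could hash every candidate ID with plain SHA-256 and match the results, but not without the key.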

11. Responsible AI:

AI systems support complex decision making in various domains, such as autonomous vehicles, healthcare, public safety, and HR practices. For AI systems to be trusted, their decisions must be reliable, explainable, accountable, and ethical. There is ongoing research on how to build these properties into AI algorithms.
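Explainability is simplest to see in a linear model, where each feature's contribution to a decision is just its weight times its value, and the contributions plus the bias add up exactly to the score. The sketch uses a hypothetical HR shortlisting model with invented weights. For complex models, model-agnostic methods such as LIME or SHAP estimate contributions approximately instead.

```python
# Hypothetical linear shortlisting model; the weights are invented for illustration
WEIGHTS = {"years_experience": 0.3, "skills_test": 0.05, "employment_gap_years": -0.8}
BIAS = -4.0


def score(candidate):
    """Linear score: bias plus the weighted sum of the candidate's features."""
    return BIAS + sum(WEIGHTS[f] * candidate[f] for f in WEIGHTS)


def explain(candidate):
    """Per-feature contributions (weight * value), largest magnitude first.
    For a linear model these add up, together with the bias, to the score."""
    contributions = {f: WEIGHTS[f] * candidate[f] for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


candidate = {"years_experience": 4, "skills_test": 70, "employment_gap_years": 1}
s = score(candidate)
print("shortlisted" if s > 0 else "not shortlisted", f"(score {s:+.2f})")
for feature, contribution in explain(candidate):
    print(f"{feature:>22}: {contribution:+.2f}")
```

Here the explanation shows that the candidate narrowly missed the shortlist because of the employment-gap penalty, which lets a reviewer ask whether that criterion is fair. This is the kind of accountability the paragraph calls for.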

This book appears in the book series Transactions on Computer Systems and Networks.

About the author

Srikrishnan Sundararajan, PhD in Computer Applications, is a retired senior professor of business analytics at the Loyola Institute of Business Administration, Chennai, India. He has held various tenured and visiting professorships in business analytics and computer science for over 10 years. He has 25 years of experience as a consultant in the information technology industry in India and the USA, in information systems development and technology support.

He is the author of a forthcoming book (ISBN 9789819903528). This book offers a comprehensive first-level introduction to data science, including Python programming, probability and statistics, multivariate analysis, survival analysis, AI/ML, and other computational techniques.